Mathematics for Machine Learning: Linear Algebra

数学在机器学习领域的应用一:线性代数

开始学习

总是觉得自己数学有一点差,可能是因为上大学学习的时候题目做的比较少,我的脑子又不太灵光,因此一直不能很好的理解数学相关的一些公式、定理等,平时编程的时候尽量找简单的方法绕开复杂的数学公式。假期有时间了,试一下帝国理工的线性代数课程,注重记录,注重理解。这也是第一次看没有中文字幕的全英文课。加油!

课程简介

In this course on Linear Algebra we look at what linear algebra is and how it relates to vectors and matrices. Then we look through what vectors and matrices are and how to work with them, including the knotty problem of eigenvalues and eigenvectors, and how to use these to solve problems. Finally we look at how to use these to do fun things with datasets - like how to rotate images of faces and how to extract eigenvectors to look at how the Pagerank algorithm works.

Since we’re aiming at data-driven applications, we’ll be implementing some of these ideas in code, not just on pencil and paper. Towards the end of the course, you’ll write code blocks and encounter Jupyter notebooks in Python, but don’t worry, these will be quite short, focussed on the concepts, and will guide you through if you’ve not coded before.

At the end of this course you will have an intuitive understanding of vectors and matrices that will help you bridge the gap into linear algebra problems, and how to apply these concepts to machine learning.

什么是线性代数

Linear algebra is a mathematical system for manipulating vectors in the spaces described by vectors.

Linear algebra is linear, because it just takes input values, and multiplies them by constants, everything is linear.

Linear algebra is algebra, that is it’s a notation describing mathematical objects and a system of manipulating those notations.

How vectors are transformed by matrices is the heart of linear algebra.

为什么我们需要线性代数?

- 让计算机快速求解多元方程组

例如:多元方程组,可以转换为

,然后进行求解。

- 为数据拟合方程

随着神经网络和机器学习的发展,并不仅仅是拟合方程,最好还能在已有方程曲线的前提下,找到最佳的拟合参数,从而更适用于当前的数据。描述一个方程的各种参数可以使用一个向量来表示,我们希望通过某种方式,数据科学或者机器学习的方式来找到最佳的拟合参数。

向量(Vector)

在计算机科学中,向量被认为是描述一个物体的属性的集合。

向量的基本操作

向量有两种操作:向量与向量之间的加法,以及向量与标量之间的乘法。

向量与向量之间的加法满足结合律(associativity)。

向量与标量之间的乘法,要将标量与向量中的每一个属性相乘

向量的其他运算

如果不以坐标系的角度去观察向量,那么一个向量由两个属性构成:向量的方向和向量的模长

向量的模长指的是向量各组成成分的平方和开根号

向量的点乘指的是向量对应位置的数值相乘之和,满足交换律(commutative)

同时满足向量的加法分配律(distributive over addition),即

向量与标量相乘满足结合律和交换律,即

向量模长与点乘之间的关系:向量自身的点乘与模长的平方相等,即

向量的余弦定理:

向量投影(projection):

向量投影是一个标量,但是,如果需要投影向量的方向,直接与被投影的单位向量相乘即可。

向量的坐标系

两个不共线的向量可以确定一个坐标系(coordinate system)。要描述一个向量,首先要定义一个坐标系,决定坐标系的是基向量。

基向量是

维的向量彼此之间不线性相关,也就是线性独立的

维向量。

- 可以扩展到整个空间。

- 空间是

维的。

虽然并不要求基向量正交,但是如果它们正交,会为解决数学问题带来很大的方便。

如果二维的基向量互相垂直,转换坐标系只需将向量投影到转换后的基向量,计算数值即可。

设原始坐标系

首先验证

然后将待转换的向量

从而得出

找到一个合适的坐标系,帮助我们解决数学问题,是非常重要的。

矩阵(Matrices)

矩阵与向量相乘,相当于将向量转换到不同的坐标系。

矩阵的乘法满足结合律,但是不满足交换律.

如

如

通过矩阵的转换实际上可以看作不同转换向量之间的和。

如果我们对

单位矩阵(identity matrix)不对向量做任何变换

设单位矩阵

根据逆矩阵的定义,

因此

通过初等行变换求解逆矩阵:

对于二维矩阵

二维行列式(determinant):

行列式为0的矩阵,维度不满足当前矩阵的维度,因此在矩阵操作前要首先检查行列式。

矩阵的转置:

爱因斯坦求和约定(Einstein summation convention)

设

则

如

因此

矩阵坐标系的转换

设原始坐标系

如果将

反之,将原始坐标系中的向量转换到

如果基向量是正交的,可以使用投影来实现坐标系的转换:

设原始坐标系

则将

施密特正交化(Gram–Schmidt process)

正交的基向量会给我们解决问题带来很多的方便,需要一种方法将基向量转换为正交的基向量。

设原始的

特征问题(Eigenproblems)

对特征向量的直观感受:在进行变换的时候方向仍然保持不变的向量。

求特征值,即

对角矩阵(diagonal matrix)会使矩阵的乘法变得更加容易,

因此可以通过特征值与特征向量的转换,将矩阵转化为对角矩阵,然后求矩阵的幂。

设特征向量

矩阵

编程练习

判断一个矩阵是奇异矩阵(singular)还是非奇异矩阵

# GRADED FUNCTION

import numpy as np

# Our function will go through the matrix replacing each row in order turning it into echelon form.

# If at any point it fails because it can't put a 1 in the leading diagonal,

# we will return the value True, otherwise, we will return False.

# There is no need to edit this function.

def isSingular(A) :

B = np.array(A, dtype=np.float_) # Make B as a copy of A, since we're going to alter it's values.

try:

fixRowZero(B)

fixRowOne(B)

fixRowTwo(B)

fixRowThree(B)

except MatrixIsSingular:

return True

return False

# This next line defines our error flag. For when things go wrong if the matrix is singular.

# There is no need to edit this line.

class MatrixIsSingular(Exception): pass

# For Row Zero, all we require is the first element is equal to 1.

# We'll divide the row by the value of A[0, 0].

# This will get us in trouble though if A[0, 0] equals 0, so first we'll test for that,

# and if this is true, we'll add one of the lower rows to the first one before the division.

# We'll repeat the test going down each lower row until we can do the division.

# There is no need to edit this function.

def fixRowZero(A) :

if A[0,0] == 0 :

A[0] = A[0] + A[1]

if A[0,0] == 0 :

A[0] = A[0] + A[2]

if A[0,0] == 0 :

A[0] = A[0] + A[3]

if A[0,0] == 0 :

raise MatrixIsSingular()

A[0] = A[0] / A[0,0]

return A

# First we'll set the sub-diagonal elements to zero, i.e. A[1,0].

# Next we want the diagonal element to be equal to one.

# We'll divide the row by the value of A[1, 1].

# Again, we need to test if this is zero.

# If so, we'll add a lower row and repeat setting the sub-diagonal elements to zero.

# There is no need to edit this function.

def fixRowOne(A) :

A[1] = A[1] - A[1,0] * A[0]

if A[1,1] == 0 :

A[1] = A[1] + A[2]

A[1] = A[1] - A[1,0] * A[0]

if A[1,1] == 0 :

A[1] = A[1] + A[3]

A[1] = A[1] - A[1,0] * A[0]

if A[1,1] == 0 :

raise MatrixIsSingular()

A[1] = A[1] / A[1,1]

return A

# This is the first function that you should complete.

# Follow the instructions inside the function at each comment.

def fixRowTwo(A) :

# Insert code below to set the sub-diagonal elements of row two to zero (there are two of them).

A[2] = A[2] - A[2,0] * A[0]

A[2] = A[2] - A[2,1] * A[1]

# Next we'll test that the diagonal element is not zero.

if A[2,2] == 0 :

# Insert code below that adds a lower row to row 2.

A[2] = A[2] + A[3]

# Now repeat your code which sets the sub-diagonal elements to zero.

A[2] = A[2] - A[2,0] * A[0]

A[2] = A[2] - A[2,1] * A[1]

if A[2,2] == 0 :

raise MatrixIsSingular()

# Finally set the diagonal element to one by dividing the whole row by that element.

A[2] = A[2] / A[2,2]

return A

# You should also complete this function

# Follow the instructions inside the function at each comment.

def fixRowThree(A) :

# Insert code below to set the sub-diagonal elements of row three to zero.

A[3] = A[3] - A[3,0] * A[0]

A[3] = A[3] - A[3,1] * A[1]

A[3] = A[3] - A[3,2] * A[2]

# Complete the if statement to test if the diagonal element is zero.

if A[3,3] == 0:

raise MatrixIsSingular()

# Transform the row to set the diagonal element to one.

A[3] = A[3] / A[3,3]

return AA = np.array([

[2, 0, 0, 0],

[0, 3, 0, 0],

[0, 0, 4, 4],

[0, 0, 5, 5]

], dtype=np.float_)

isSingular(A)

A = np.array([

[0, 7, -5, 3],

[2, 8, 0, 4],

[3, 12, 0, 5],

[1, 3, 1, 3]

], dtype=np.float_)

isSingular(A)

fixRowZero(A)

fixRowOne(A)

fixRowTwo(A)

fixRowThree(A)施密特正交化

# GRADED FUNCTION

import numpy as np

import numpy.linalg as la

verySmallNumber = 1e-14 # That's 1×10⁻¹⁴ = 0.00000000000001

# Our first function will perform the Gram-Schmidt procedure for 4 basis vectors.

# We'll take this list of vectors as the columns of a matrix, A.

# We'll then go through the vectors one at a time and set them to be orthogonal

# to all the vectors that came before it. Before normalising.

# Follow the instructions inside the function at each comment.

# You will be told where to add code to complete the function.

def gsBasis4(A) :

B = np.array(A, dtype=np.float_) # Make B as a copy of A, since we're going to alter it's values.

# The zeroth column is easy, since it has no other vectors to make it normal to.

# All that needs to be done is to normalise it. I.e. divide by its modulus, or norm.

B[:, 0] = B[:, 0] / la.norm(B[:, 0])

# For the first column, we need to subtract any overlap with our new zeroth vector.

B[:, 1] = B[:, 1] - B[:, 1] @ B[:, 0] * B[:, 0]

# If there's anything left after that subtraction, then B[:, 1] is linearly independant of B[:, 0]

# If this is the case, we can normalise it. Otherwise we'll set that vector to zero.

if la.norm(B[:, 1]) > verySmallNumber :

B[:, 1] = B[:, 1] / la.norm(B[:, 1])

else :

B[:, 1] = np.zeros_like(B[:, 1])

# Now we need to repeat the process for column 2.

# Insert two lines of code, the first to subtract the overlap with the zeroth vector,

# and the second to subtract the overlap with the first.

B[:, 2] = B[:, 2] - B[:, 2] @ B[:, 0] * B[:, 0]

B[:, 2] = B[:, 2] - B[:, 2] @ B[:, 1] * B[:, 1]

# Again we'll need to normalise our new vector.

# Copy and adapt the normalisation fragment from above to column 2.

if la.norm(B[:, 2]) > verySmallNumber :

B[:, 2] = B[:, 2] / la.norm(B[:, 2])

else :

B[:, 2] = np.zeros_like(B[:, 2])

# Finally, column three:

# Insert code to subtract the overlap with the first three vectors.

B[:, 3] = B[:, 3] - B[:, 3] @ B[:, 0] * B[:, 0]

B[:, 3] = B[:, 3] - B[:, 3] @ B[:, 1] * B[:, 1]

B[:, 3] = B[:, 3] - B[:, 3] @ B[:, 2] * B[:, 2]

# Now normalise if possible

if la.norm(B[:, 3]) > verySmallNumber :

B[:, 3] = B[:, 3] / la.norm(B[:, 3])

else :

B[:, 3] = np.zeros_like(B[:, 3])

# Finally, we return the result:

return B

# The second part of this exercise will generalise the procedure.

# Previously, we could only have four vectors, and there was a lot of repeating in the code.

# We'll use a for-loop here to iterate the process for each vector.

def gsBasis(A) :

B = np.array(A, dtype=np.float_) # Make B as a copy of A, since we're going to alter it's values.

# Loop over all vectors, starting with zero, label them with i

for i in range(B.shape[1]) :

# Inside that loop, loop over all previous vectors, j, to subtract.

for j in range(i) :

# Complete the code to subtract the overlap with previous vectors.

# you'll need the current vector B[:, i] and a previous vector B[:, j]

B[:, i] = B[:, i] - B[:, i] @ B[:, j] * B[:, j]

# Next insert code to do the normalisation test for B[:, i]

if la.norm(B[:, i]) > verySmallNumber :

B[:, i] = B[:, i] / la.norm(B[:, i])

else :

B[:, i] = np.zeros_like(B[:, i])

# Finally, we return the result:

return B

# This function uses the Gram-schmidt process to calculate the dimension

# spanned by a list of vectors.

# Since each vector is normalised to one, or is zero,

# the sum of all the norms will be the dimension.

def dimensions(A) :

return np.sum(la.norm(gsBasis(A), axis=0))V = np.array([[1,0,2,6],

[0,1,8,2],

[2,8,3,1],

[1,-6,2,3]], dtype=np.float_)

gsBasis4(V)

# Once you've done Gram-Schmidt once,

# doing it again should give you the same result. Test this:

U = gsBasis4(V)

gsBasis4(U)

# Try the general function too.

gsBasis(V)

# See what happens for non-square matrices

A = np.array([[3,2,3],

[2,5,-1],

[2,4,8],

[12,2,1]], dtype=np.float_)

gsBasis(A)

dimensions(A)

B = np.array([[6,2,1,7,5],

[2,8,5,-4,1],

[1,-6,3,2,8]], dtype=np.float_)

gsBasis(B)

dimensions(B)

# Now let's see what happens when we have one vector that is a linear combination of the others.

C = np.array([[1,0,2],

[0,1,-3],

[1,0,2]], dtype=np.float_)

gsBasis(C)

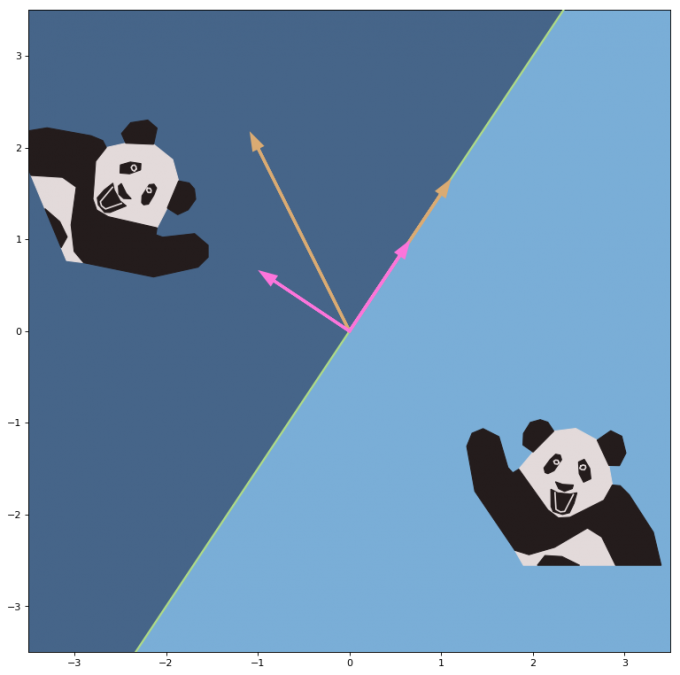

dimensions(C)镜面投影

# PACKAGE

# Run this cell first once to load the dependancies.

import numpy as np

from numpy.linalg import norm, inv

from numpy import transpose

from readonly.bearNecessities import *

# GRADED FUNCTION

# You should edit this cell.

# In this function, you will return the transformation matrix T,

# having built it out of an orthonormal basis set E that you create from Bear's Basis

# and a transformation matrix in the mirror's coordinates TE.

def build_reflection_matrix(bearBasis) : # The parameter bearBasis is a 2×2 matrix that is passed to the function.

# Use the gsBasis function on bearBasis to get the mirror's orthonormal basis.

E = gsBasis(bearBasis)

# Write a matrix in component form that performs the mirror's reflection in the mirror's basis.

# Recall, the mirror operates by negating the last component of a vector.

# Replace a,b,c,d with appropriate values

TE = np.array([[1, 0],

[0, -1]])

# Combine the matrices E and TE to produce your transformation matrix.

T = E @ TE @ inv(E)

# Finally, we return the result. There is no need to change this line.

return T

# First load Pyplot, a graph plotting library.

%matplotlib inline

import matplotlib.pyplot as plt

# This is the matrix of Bear's basis vectors.

# (When you've done the exercise once, see what happns when you change Bear's basis.)

bearBasis = np.array(

[[1, -1],

[1.5, 2]])

# This line uses your code to build a transformation matrix for us to use.

T = build_reflection_matrix(bearBasis)

# Bear is drawn as a set of polygons, the vertices of which are placed as a matrix list of column vectors.

# We have three of these non-square matrix lists: bear_white_fur, bear_black_fur, and bear_face.

# We'll make new lists of vertices by applying the T matrix you've calculated.

reflected_bear_white_fur = T @ bear_white_fur

reflected_bear_black_fur = T @ bear_black_fur

reflected_bear_face = T @ bear_face

# This next line runs a code to set up the graphics environment.

ax = draw_mirror(bearBasis)

# We'll first plot Bear, his white fur, his black fur, and his face.

ax.fill(bear_white_fur[0], bear_white_fur[1], color=bear_white, zorder=1)

ax.fill(bear_black_fur[0], bear_black_fur[1], color=bear_black, zorder=2)

ax.plot(bear_face[0], bear_face[1], color=bear_white, zorder=3)

# Next we'll plot Bear's reflection.

ax.fill(reflected_bear_white_fur[0], reflected_bear_white_fur[1], color=bear_white, zorder=1)

ax.fill(reflected_bear_black_fur[0], reflected_bear_black_fur[1], color=bear_black, zorder=2)

ax.plot(reflected_bear_face[0], reflected_bear_face[1], color=bear_white, zorder=3);

PageRank

# PACKAGE

# Here are the imports again, just in case you need them.

# There is no need to edit or submit this cell.

import numpy as np

import numpy.linalg as la

from readonly.PageRankFunctions import *

np.set_printoptions(suppress=True)

# GRADED FUNCTION

# Complete this function to provide the PageRank for an arbitrarily sized internet.

# I.e. the principal eigenvector of the damped system, using the power iteration method.

# (Normalisation doesn't matter here)

# The functions inputs are the linkMatrix, and d the damping parameter - as defined in this worksheet.

# (The damping parameter, d, will be set by the function - no need to set this yourself.)

def pageRank(linkMatrix, d) :

n = linkMatrix.shape[0]

M = d * linkMatrix + (1-d)/n * np.ones([n, n])

r = 100 * np.ones(n) / n

lastR = r

r = M @ r

i = 0

while la.norm(lastR - r) > 0.01 :

lastR = r

r = M @ r

i += 1

return r# Use the following function to generate internets of different sizes.

generate_internet(5)

# Test your PageRank method against the built in "eig" method.

# You should see yours is a lot faster for large internets

L = generate_internet(10)

pageRank(L, 1)

# Do note, this is calculating the eigenvalues of the link matrix, L,

# without any damping. It may give different results that your pageRank function.

# If you wish, you could modify this cell to include damping.

# (There is no credit for this though)

eVals, eVecs = la.eig(L) # Gets the eigenvalues and vectors

order = np.absolute(eVals).argsort()[::-1] # Orders them by their eigenvalues

eVals = eVals[order]

eVecs = eVecs[:,order]

r = eVecs[:, 0]

100 * np.real(r / np.sum(r))

# You may wish to view the PageRank graphically.

# This code will draw a bar chart, for each (numbered) website on the generated internet,

# The height of each bar will be the score in the PageRank.

# Run this code to see the PageRank for each internet you generate.

# Hopefully you should see what you might expect

# - there are a few clusters of important websites, but most on the internet are rubbish!

%pylab notebook

r = pageRank(generate_internet(100), 0.9)

plt.bar(arange(r.shape[0]), r);资料

Formula Sheet: Sheet summarising all the formulae covered in this course.